The US presidential election in early November looks increasingly alarming in the shadow of the pandemic. Concerns about deepfake attacks on democracy, which mislead and confuse voters, are reigniting as well. No major attempted attack is known so far, and the small-scale initiatives coming out of the White House qualify as “cheap fraud” at most. But that is no reason to relax. The global power that struggled even to supply enough masks when the Covid-19 pandemic first erupted knows very well that it is just as vulnerable to the deepfake threat.

If a last-minute deepfake bomb that manipulates voter preferences goes off, it will already be too late. So the United States is on alert. Public organizations, the media, technology platforms and universities in particular are keeping a close watch for deepfakes. Everyone agrees that detection models based on artificial intelligence (AI) have not yet made a big difference. So, in the face of the greatest deepfake test in history, the job once again seems to fall to real human intelligence (RI).

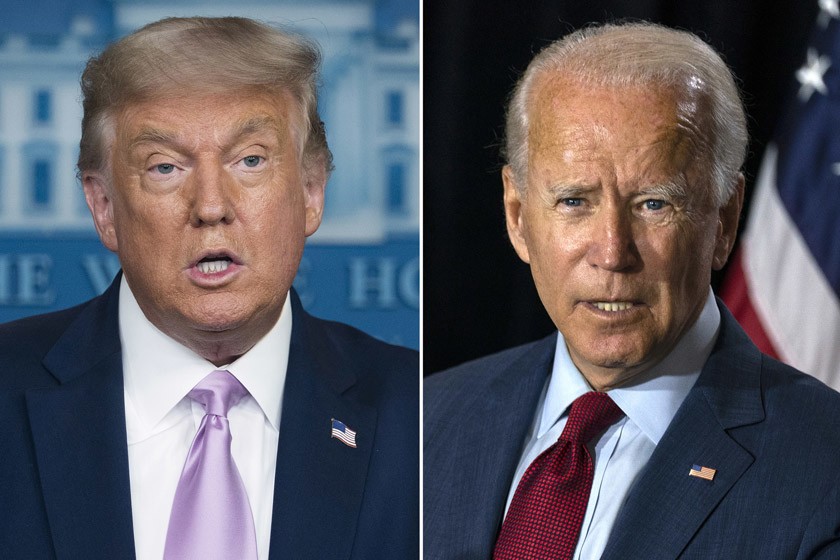

Cheap deepfake jokes from President Trump’s team and the Republicans…

An assessment published in the Washington Post on September 11 draws attention to two key issues heading into a US election that, for months, many have feared will turn into a deepfake arena. First, less than 40 days before the election, fake synthetic videos targeting Biden, the Democratic nominee, have begun to appear. They are low-cost, clichéd deepfakes, far from cutting-edge. But the fact that they were posted on the same day by well-known political figures is eerie and dangerous.

The person who posted the manipulated video of Joe Biden appearing to fall asleep in a TV interview on August 31 is none other than Dan Scavino, the White House Director of Social Media. On the same day, Republican Congressman Steve Scalise shared another misleading video, manipulated to distort Biden’s views on defunding the police. Both posted their videos on Twitter, which had announced a massive fight against disinformation and fake accounts ahead of the US election. Twitter has removed only the video Scavino shared, labelling it a “manipulated video.”

It seems that President Trump’s White House and the Republican Party, which have a duty to protect US democracy, do not shy away from releasing deepfake videos that mislead voters. Although these videos are made with simple, open-source deepfake models that anyone can use, deepfakes are too sensitive a topic to be passed off as a “joke” ahead of the presidential election. What is eerie and dangerous is that these initiatives, coming from the US President’s own team and party, lend deepfakes aimed at democracy a tolerance and legitimacy dressed up as humor.

If a deepfake stain falls on the results of a pandemic election…

The other important issue the Washington Post raises about the deepfake threat is that some will try to cast a shadow over the election, because the pandemic election process will stretch into the period leading up to the inauguration. Because of the pandemic, a large share of votes is also being cast by mail. Clint Watts of the Foreign Policy Research Institute and Tim Hwang, program head of the Cogsec Conference, which focuses on online disinformation operations, argue that the real danger lies not before the election but in the period after the vote. So the deepfake alert won’t end on the night of November 3. On the contrary, November and December will be even more intense.

As mail-in ballots are counted and the winner begins to become clear, efforts to undermine the legitimacy of the election will be particularly dangerous in this sensitive period. The “October Surprise,” the tradition of late developments before each US election that aim to sway voters and influence the result, is more likely to be replaced this time by a November or even December surprise. Sophisticated malevolent actors may be waiting for a moment of post-election uncertainty to put their most powerful tools, such as deepfakes, to operational use.

Does a deepfake video ignite the spark of conflict?

Polls in the US already show that nearly half of voters do not believe this year’s presidential election will be fair and honest. Even more chilling is the concern that contested results could lead to violence. A poll conducted by the group Braver Angels on October 1-2 among about 2,000 registered voters found that 47 percent of respondents believe the election will not be fair and honest. In another poll of 1,505 voters, 56 percent of respondents said they believed violence would increase because of the results.

President Donald Trump, whose Covid-19 treatment continues, has already claimed that votes cast by mail will be fraudulent. If Trump loses the election, his supporters will question its legitimacy, and even if he wins, the polarization between the two parties’ voters will deepen. Alex Theodoridis, a professor of political science at the University of Massachusetts-Amherst, considers it possible that this polarization will turn into violence.

Even Biden said, in an October 6 speech in Gettysburg, Pennsylvania, one of the most important sites of the American Civil War: “When I look at America today, I worry that the country is in a dangerous place.” Would the sudden, virus-like spread of a fake synthetic video about, say, the fate of mail-in votes or alleged cheating in the count not be enough to light the fuse on such tense ground? And all this at a time when release has been ordered for the racist police officer at the center of the killing of George Floyd, a Black man, which brought the country and the world to its feet…

Let artificial intelligence produce, let real intelligence catch…

It seems that maintaining trust will be critical in the pandemic election process. A collapse of public confidence in the election result could lead to a major constitutional crisis in the United States. In the event of a contested result, social media and the Covid-19 pandemic open the door to a far more dangerous environment of conflict than in the past.

The Washington Post assessment, which stresses that it is too early to expect a solution from deepfake detection algorithms in this period, lists the measures that can be taken under three headings. The first is the development of an early warning system against media manipulation campaigns, in collaboration with universities, non-governmental organizations and online platforms. The second is the creation of a nationwide network of “disinformation medics” who would also follow conversations on social media. The third is for Congress, together with the social media platforms, to implement drastic measures that would discipline all users who resort to deepfakes, including elected politicians. As can be seen, none of the proposals is technological in nature. On the contrary, all of them are human-centered measures that rely on RI to catch and stop what is produced with AI.

Average human intelligence can match the score of the most successful algorithm

Political scientists are looking for a human-centered answer to the deepfake earthquake threatening democracy. But how well can human intelligence, attention and intuition recognize AI-based deepfakes? A study published in early October in Sentry, the publication of the National Tertiary Education Union (NTEU), offers some very surprising data on this question. In his article, Dr. Simon J. Cropper of the University of Melbourne describes how this year’s Facebook-led competition for deepfake detection models, the Deepfake Detection Challenge (DFDC), was put to the test on people.

More than 2,000 participants from all over the world entered the DFDC with the models their teams developed. To train and test their code, competitors used a specially created data set of more than 124,000 videos, both real and fake, featuring unknown actors. The winning model, developed by Selim Seferbekov, was able to tell real videos from fake ones on a special test set only 66% of the time. This suggests that performance may decline further if detection technologies fail to keep pace with the rising quality of deepfake technology.

“We think people can do better than that,” says Dr. Cropper. This claim is, of course, based on research. The study, run as an event during National Science Week in Victoria, uses the public DFDC dataset. In the short 15-minute version, participants are shown a selection of DFDC videos and asked whether each is real or fake. They are also asked how confident they are in their choice and what led them to judge a video fake. The study also includes a longer questionnaire with more videos and personality measures. More than 700 participants completed the short survey, and more than 100 completed the longer one. When the results are analyzed, a very interesting and unexpected picture emerges.

The “super detector” is not AI but human

On average, humans relying on their own intelligence (RI) do almost as well as the most successful AI-based algorithms at the DFDC, with a hit rate of about 62%. There are large differences between individuals, and those with the highest scores perform almost perfectly. Moreover, AI-based detection models make many passes over the data in the public test videos to reach their results, whereas human subjects decide after seeing each video only once. When subjects are asked the reason for their decision, the unconditional response (UR), that is, simply feeling that it is so, stands out. It is not yet possible to say whether performance is linked to measurable personality traits, because it has not been determined whether these “super detectors” have strong facial recognition or a strong memory for time and place. Still, Dr. Cropper, who led the research, grounds in evidence his claim that human intuition (UR) can always beat AI in this cat-and-mouse game.
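To make the “hit rate” figure concrete, here is a minimal sketch in Python of how a per-participant score and a group average could be computed from real-or-fake answers. It is not the study’s actual analysis: the function `hit_rate`, the participant names and the five-video answer sheets are all hypothetical and exist only to illustrate the arithmetic.

```python
from statistics import mean


def hit_rate(answers, truth):
    """Return the fraction of videos labelled correctly (0.0 to 1.0)."""
    if len(answers) != len(truth):
        raise ValueError("answers and truth must cover the same videos")
    correct = sum(a == t for a, t in zip(answers, truth))
    return correct / len(truth)


# Hypothetical ground truth for five videos (not real DFDC data).
truth = ["fake", "real", "fake", "fake", "real"]

# Hypothetical answer sheets: one list of guesses per participant.
responses = {
    "participant_a": ["fake", "real", "fake", "fake", "real"],
    "participant_b": ["real", "real", "fake", "real", "real"],
    "participant_c": ["real", "fake", "fake", "real", "real"],
}

# Score every participant, then summarize the group.
scores = {name: hit_rate(ans, truth) for name, ans in responses.items()}
average = mean(scores.values())
best = max(scores, key=scores.get)

print(f"average hit rate: {average:.0%}")
print(f"top scorer: {best} ({scores[best]:.0%})")
```

Even in this toy example the group average hides a wide spread between individuals, which is the pattern the study describes: a middling mean alongside top scorers who are close to perfect.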

The World Economic Forum (WEF), one of the most powerful platforms of globalization, issued a warning in an October 5 assessment on artificial intelligence in its Global Agenda: “The emerging threat of deepfakes may have an unprecedented effect on this election cycle (the US presidential election) and may raise serious questions about the integrity of democratic elections and of our society in general.” It added: “Given the cross-border nature of the problem, the agenda must be supported by global consensus and action.”

Whatever the time difference, we will watch the US election with a different kind of thrill, the way the whole world was once glued to a NASA space mission or a world heavyweight boxing title fight. Because if the fuse of deepfake-induced global chaos is lit in the United States, the entire Earth will be shaken.