Anyone with average intelligence and minimal life experience knows not to leave things that are dangerous when misused within easy reach. This applies to medicine and to a licensed weapon. Even the most innocent confectionery can have deadly consequences when it is not consumed in a healthy or safe way. We keep these things in safe places, out of the reach of those at risk, and we take measures to prevent them from falling into the wrong hands. We do not use them in dangerous or improper ways. We know not to leave an emergency bag or a medicine cabinet in an easily accessible place.

Due to the COVID-19 pandemic, the digital lifestyle is being pushed into society even faster. Artificial intelligence (AI) technologies such as deepfakes, which are known to pose various dangers to the online world, are shared on the internet for everyone to access and use easily. Then fear is spread across the online world. Warnings are issued against disinformation created with synthetic voices and images that are convincing enough to cast doubt on facts and to deceive the mind. Meanwhile, global contests with high expectations and millions of dollars in funding are organized to develop deepfake detection models, led by online giants such as Facebook and Microsoft. After months of waiting, the result is not that the developed models succeed in catching deepfakes. Instead, the existing global R&D will be opened for common use so that this success may be achieved in the future. Fortunately, there are no deepfake production tools that can only be used with malicious intent.

Deepfakes are constantly mutating

Deepfake technologies will undoubtedly have many useful applications in areas such as health, sports, communications, and marketing, alongside the other innovation opportunities that AI offers. This legitimizes the deepfake technologies being developed, and so nobody is in a position to demand that these technologies, whose expected threat far outweighs their possible benefits, be blocked by prohibitive policies. That would be an act against freedom of expression. However, experts agree that it will take time to develop effective detection tools against malicious, dangerous, and harmful deepfake production, and perhaps this war of algorithms will never end. This is because new technologies that make deepfakes more believable every day are constantly being developed in this field. In other words, deepfakes are undergoing constant technological mutation. Deepfake detection models, on the other hand, are expected to be a miracle drug that quickly adapts to every new deepfake technology, catches all types of deepfakes, and prevents their harmful effects.

Mosquito sprays will only work if you drain the swamp

In the perfect ecosystem of nature, whose secrets science has been trying to unravel for centuries, every being is an extraordinary part of a delicate mechanism. In the cycle of life, every creature needs another, because that is the only way natural life can continue. Even blood-sucking mosquitoes, the carriers of deadly infectious diseases, are a food source for many different creatures, from fish to various birds, bats, and spiders. Science holds that mosquitoes are not a key species for the ecological balance. However, instead of eliminating this species that poses a danger to humans, humanity produces the dirty streams and swamps in which mosquitoes reproduce uncontrollably. Pesticides do not work, and mosquitoes become a great threat to humanity with the deadly diseases they carry.

Science and technology are not as perfect as nature, but they form the most advanced ecosystem that human beings have developed over the centuries. Every new scientific finding and every new technology is the input and source of information for the next, more advanced system. Like all beings in nature, every invention can have terrible consequences for humanity when it gets out of control, to the extent that it could destroy the world. That is why nuclear, biological, and chemical facilities are specially protected. No one is even allowed near these facilities unless they are authorized.

Are open-source platforms turning into a deepfake swamp?

As the world goes online, the threats and dangers that target humanity become digital. We have all but opened Pandora's box with AI technologies that push past the limits of human intelligence, using algorithms that run on artificial neural networks modeled on the human brain. Deepfake software seems to be no different from dangerous chemicals, and that is the perception imposed on the online community. Yet it is clear that deepfake tools are easily accessible from open-source software libraries. This availability speeds up the spread and mutation of deepfakes.

New York University (NYU) researchers reveal that free, downloadable open-source software is the primary factor in the commodification of deepfake technology. Many of these projects are hosted and shared on GitHub. Many project creators even request user donations through Patreon, PayPal, or Bitcoin. Having determined that the software mostly relates to face swapping and synthetic voice production, the NYU research team emphasizes that most of these tools cater to deepfake professionals rather than amateur developers, because they often require programming experience and a powerful graphics processor (GPU). However, it has also been pointed out that some popular open-source deepfake platforms have made it faster for amateur developers to use these tools, thanks to published user guides and the tutorials of discussion groups.

Microsoft bought GitHub for $7.5 billion in 2018

The research titled “Deepfakes: Uncovering hardcore open sources on GitHub,” published in October 2019 by Rachael Winter and Anastasia Salter, also highlights the active role of GitHub in the process. The study points out that the first pornographic deepfake samples, published in late 2017 by the Reddit.com user who introduced the concept of “deepfakes” and whose identity is still unknown today, have been banned gradually since the beginning of 2018. However, it argues that the main project is carried on through a series of open-source software shared on the GitHub platform. The study examines the platform role that GitHub plays in spreading deepfakes and questions open-source ethics. It concludes that the deepfake project has been deliberately spread to areas with limited moderation, with the support of open-source code, and has thereby maintained its existence and development. The article titled “Analysis of the commodification of Deepfakes,” published in the NYU Legislation and Public Policy Journal in February by Robert Volkert and Henry Ajder, also points to the contribution of open-source platforms. The article states that open-source platforms are the starting point for the open commercialization of deepfakes and for their increasing speed of circulation. It argues that the deepfake services and marketplaces that have begun to emerge also advance the commodification process.

As deepfake examples and their popularity increase, so does the demand for better code that creates and enhances such content. Many important tools, such as Faceswap, DeepFaceLab, and Avatarify, which are the cornerstones of the deepfake development process, are published in GitHub repositories. Open-source platforms such as GitHub or TensorFlow continue to make significant contributions to developers in building algorithms and creating easy-to-use mobile applications, despite risks such as potential vulnerabilities in the code and license incompatibility.

GitHub is the open-source platform that the software world knows best and uses most. Many companies and software developers use the tools published on GitHub in their desktop, cloud-based, or mobile application development processes. It was announced on June 4, 2018, that GitHub was being sold to technology giant Microsoft for $7.5 billion. Since then, Microsoft has owned the largest platform for open-source software.

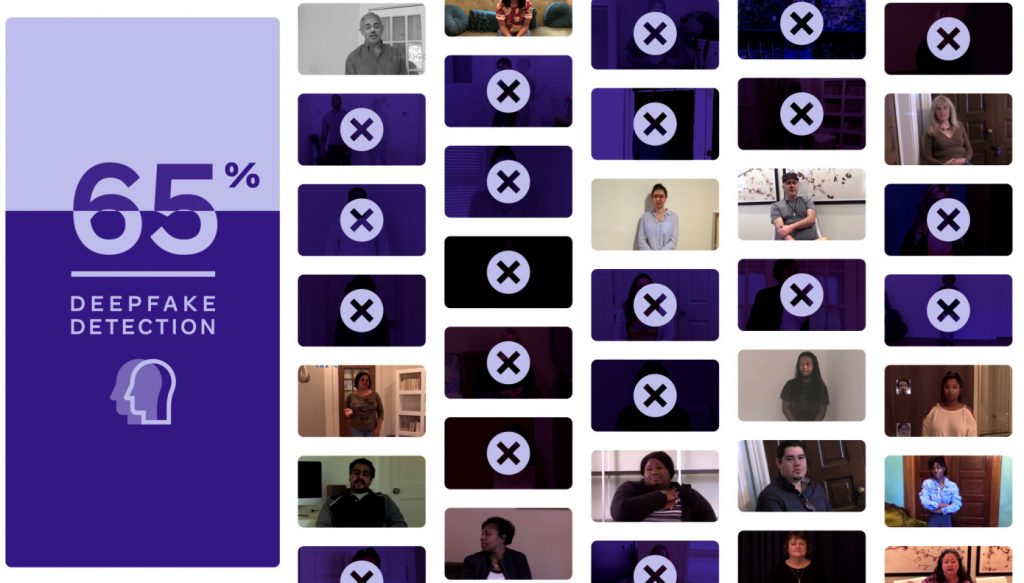

The result is a 65% performance in DFDC. There is hope, but no solution yet.

The results of the Deepfake Detection Challenge (DFDC), supported by Facebook, Microsoft, and Amazon, could only be announced on June 12 because of the pandemic. The competition was launched on December 11 with a data set of 115 thousand videos. Facebook announced on ai.facebook.com that the best-performing deepfake detection model in the first stage achieved an average precision of 82.56%. The model that finished the final stage in first place on the previously unpublished, closed data set, and so won the competition, reached an average performance of only 65.18%. We will evaluate (in another article) the results of this first global deepfake detection contest, in which 2,114 participants competed with more than 35 thousand models. The important result, however, is that only two-thirds of the road toward 100% detection of deepfakes has been covered. And deepfake technologies do not stop and wait. New deepfake tools are constantly being developed. That is why Facebook has decided to share with all developers the final-stage data set, which was created in only 38.5 days with more than 3,500 actors. Facebook has announced that it will publish this data set as open-source raw data in order to contribute to the development of a new generation of advanced AI-based deepfake detection models.

It is hard to close the gap without reforming open-source platforms

According to Facebook’s announcement, the DFDC demonstrated the importance of “learning to generalize to unpredictable deepfake examples.” This means that, for deepfake detection models to reach 100% performance, mastering the known deepfake technologies is not enough. It is also necessary to design AI models that are sensitive to newly developed deepfake tools and to those that have not been developed yet.

So, those who develop deepfake detection models are expected to be faster than those who develop deepfake tools, and to gain an edge in the war of algorithms before it is too late. Don’t they deserve some time and positive discrimination for this? Isn’t it necessary to tidy up the open-source platforms that supply unconditional, unregulated ammunition to the unidentified people who develop deepfake tools and produce deepfakes with them, and that speed them up? There is no need for blocking or banning. If the sharing of open-source code for deepfake tools were simply tied to registration and rules, wouldn’t the deepfake threat slow down? Is it more difficult to uphold the supreme values of personal rights, peace, security, and democracy than to run million-dollar competitions?