Financial crimes that target individuals or companies mostly aim to steal the victims’ money. When the target is a bank, a central bank, or the entire financial system, however, the attack becomes a national security threat. Synthetic media and its most powerful and convincing weapon, the deepfake, can encourage large-scale attacks on financial infrastructure and make them more feasible. In that case, politically motivated attacks that deal major blows to the global free market economy, starting with less developed economies, may also be on the agenda.

Let’s take a look at the possible effects of cybercrime, and at scenarios in which deepfakes play the leading role, against financial institutions and the financial system.

Bank failure rumors

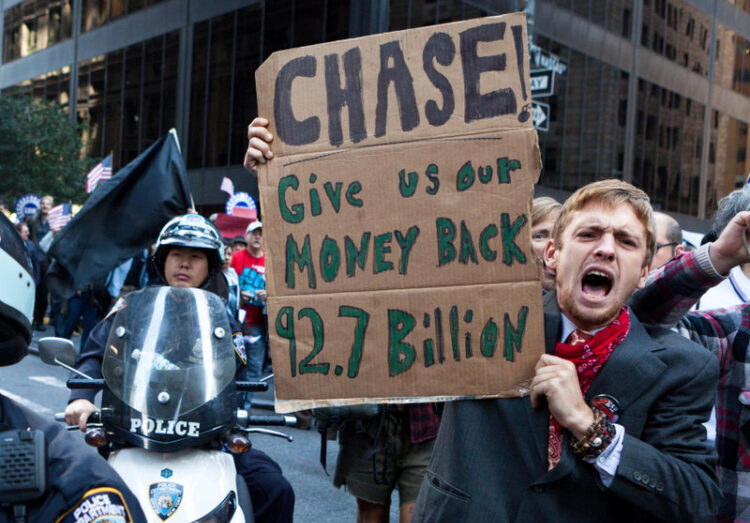

To date, deposit banks in some countries have run into trouble that stemmed in part from social media rumors of financial weakness. Online rumors about a bank’s poor financial health, although sometimes greatly exaggerated, have often caused real problems in the banking sector. Social media, traditional media and word of mouth all provide fertile ground for spreading such rumors. Once public doubts about a bank’s soundness start to spread, social media can amplify them quickly.

Determining the source and basis of bank rumors can be difficult. In 2014, the Bulgarian government accused opposition parties of coordinating a “charge” against the reputation of several banks, but did not name the parties. Events like this, regardless of their source, offer cyber attackers a template they can reuse in the future.

A synthetic social botnet built by seizing thousands of devices could also be used to provoke or intensify the rumors that drive bank runs. Alternatively, a deepfake video posted on social media could depict a bank executive or government official talking about serious liquidity problems. An effective deepfake-focused cyber-attack on banks would probably come at a time when a country’s financial system is already experiencing problems.

Cyber attackers can also combine the deepfake weapon with real images. By pairing real videos with false or misleading content, they can make genuine footage create a false impression. For example, they can present images of queues in front of bank branches, taken in past years or in a different country, as crowds trying to withdraw their deposits.

Flash crash scenarios

On April 23, 2013, a state-backed hacking group called the Syrian Electronic Army hijacked the Associated Press Twitter account and tweeted: “Breaking: Two Explosions in the White House and Barack Obama is injured.” This false claim triggered an instant flood of transactions that has been called “the most active two minutes in stock market history.” Automated trading algorithms made up the bulk of the volume. In just three minutes, the S&P 500 lost $136 billion in value, and crude oil prices and Treasury bond yields also fell. But the shock ended as quickly as it began, and markets fully recovered within minutes.

Cyber criminals with political or financial goals could readily try to use deepfakes to cause the kind of shock and chaos that suddenly freezes markets. For example, a synthetic audio or video recording of the Saudi and Russian oil ministers negotiating production quotas could be circulated online to disrupt oil prices and other markets, even if only briefly. Such fakes can be refuted quickly, but countries and leaders that already suffer from low public trust may find it difficult to dispel the suspicions a deepfake creates. If the shock and chaos are dispelled only after a delay, the bill for the resulting damage becomes very heavy.

A convincing deepfake could well cause greater and more lasting harm than a Twitter account hijacking that lasts a few minutes. Deepfake videos in particular benefit from the “picture superiority effect”, a psychological bias that makes images more believable and memorable than other types of content. Because deepfakes aim to spread misinformation organically through social and traditional media, they can eliminate the need to hack an influential news account in order to publicize a false claim.

In the seven years since the Syrian Electronic Army’s Twitter attack, markets have not experienced a similar disinformation attack. Market players and observers have become more wary of breaking news. Yet another flash attack, this time using a deepfake, is still possible at any moment. Even a short-lived collapse can let attackers profit through well-timed trades and can leave lasting psychological effects.

Disinformation attacks on central banks and public financial regulators

Central banks and financial regulators around the world have been forced to tackle attacks that take the form of online rumors meant to manipulate markets. In 2019, the central banks of India and Myanmar each tried to quell social media rumors that certain commercial banks would soon be shut down. In 2010, false claims that the head of China’s central bank had defected spread online and spooked short-term credit markets. In 2000, US stocks were hit for several hours by false rumors that the Federal Reserve chairman had been in a car accident.

Deepfakes can be used to create fake audio and video recordings of central bank executives privately discussing future interest rate changes, liquidity issues or exchange rate policies. For example, a “leaked” audio clip of a fictitious central bank meeting could create the perception that officials are worried about inflation and are planning to raise interest rates. Deepfakes can also target central bank governors or financial regulators personally, for political purposes. For example, a manager of a public company could be shown taking bribes from a businessperson in return for stopping a corruption investigation.

Public trust in the victim determines the impact of a deepfake

Deepfakes will probably have a greater impact in countries with less trust in financial oversight mechanisms and with less developed democracies and economies. The trust the victim enjoys in the community is critical to debunking a deepfake effectively. In times of financial crisis, deepfakes can exploit and amplify pre-existing economic fears.

Even in large, stable economies, central banks and financial authorities are often criticized for vague, overly broad or slow public communication. A flawed government response to an unexpected deepfake could extend the window in which attackers can cause chaos and profit from short-term speculative trades.

Synthetic public opinion used to manipulate financial policy

Astroturfing is the practice of hiding the sponsors of a message or organization so that it appears to originate from, and be supported by, grassroots participants. Synthetic public support that acts as a mask can be created for a political movement, an advertising campaign, or a public relations project. The method aims to lend credibility to statements or organizations by withholding information about the sponsors’ financial connections, and it produces “fake” or “artificial” support rather than a “real” or “natural” audience of supporters.

Regulators, including those overseeing the financial sector, will increasingly have to deal with covert attempts to manipulate policy by creating an appearance of mass support. For example, the U.S. Securities and Exchange Commission and the Consumer Financial Protection Bureau have both encountered large-scale abuse of the online systems they use to collect public comments on proposed regulations.

Content generated by artificial intelligence can make synthetic mass support seem more realistic. Text-generating algorithms can produce any amount of text on any subject. Astroturfers can use this technique to generate thousands or millions of fake comments opposing or supporting a particular financial regulation. Comments of this kind look far more convincing, and are harder to detect, than those of traditional fake campaigns.

Synthetic fakes for $100

A Harvard University student successfully tested this approach in 2019. Using previous regulatory comments as training data, he generated 1,001 synthetic comments on a real proposed rule. The comments were of high quality and made a variety of arguments. People who were asked to review both synthetic and genuine comments were unable to tell them apart. Notably, the research cost less than $100 and was carried out on an “older, everyday model HP laptop” by a college senior who was, in his own words, a “novice coder.”

Detection tools are being developed to help distinguish text created by artificial intelligence from text written by humans, and algorithms can be trained to spot the difference. Still, detection algorithms are not flawless, and a determined adversary will use every means to evade them. For example, synthetic text generators could be designed to produce more irregular, human-like output.

Even the US is vulnerable to synthetic fake public opinion

Synthetic text detection tools can only help if they are widely deployed. Even in the United States, a cradle of technology, the fact that institutions have not yet implemented far more basic forms of comment verification raises concerns. A 2019 U.S. Senate report found that none of the fourteen agencies surveyed used CAPTCHAs, or indeed any technology, to verify that the people submitting public comments were real. In the Harvard experiment, all 1,001 synthetic comments were successfully submitted to the agency. Before they were voluntarily withdrawn, the comments generated by artificial intelligence accounted for the majority of the 1,810 comments the agency received.
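To put the word “majority” into numbers, the share can be worked out directly from the two figures quoted above. The short Python sketch below is only an illustration: the variable names and the `share` helper are hypothetical, and the only inputs are the 1,001 synthetic comments and the 1,810 total comments mentioned in the text.

```python
# Figures quoted in the text; everything else here is illustrative.
SYNTHETIC_COMMENTS = 1001   # AI-generated comments submitted in the experiment
TOTAL_COMMENTS = 1810       # all comments the agency received


def share(part: int, whole: int) -> float:
    """Return `part` as a percentage of `whole`."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return 100.0 * part / whole


genuine_comments = TOTAL_COMMENTS - SYNTHETIC_COMMENTS
synthetic_share = share(SYNTHETIC_COMMENTS, TOTAL_COMMENTS)
genuine_share = share(genuine_comments, TOTAL_COMMENTS)

print(f"Synthetic: {SYNTHETIC_COMMENTS} comments ({synthetic_share:.1f}%)")
print(f"Other:     {genuine_comments} comments ({genuine_share:.1f}%)")
```

On these figures, roughly 55 percent of everything the agency received came from a single laptop, while the remaining 809 comments made up about 45 percent.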

Synthetic mass support operations are seen as a threat that reduces public confidence in the rule-making process and can lead to significant legal or political consequences. Synthetic tools pose a great danger as a powerful new method for digital astroturfing.

None of these scenarios yet seems to pose a serious threat to the stability of the global financial system or of national markets in mature, healthy economies. Developed economies are often assumed to be resistant to disinformation campaigns, regardless of the technique used. Even before deepfakes were invented, manipulative attacks could occasionally upset markets. For now, synthetic media is seen as more likely to cause material damage to targeted people and businesses. Emerging markets, however, face greater threats from synthetic media. Countries with weaker economies and less trusted institutions already have to fight more financial disinformation, and deepfakes could exacerbate that problem. Developed countries will also become more vulnerable during international financial crises.