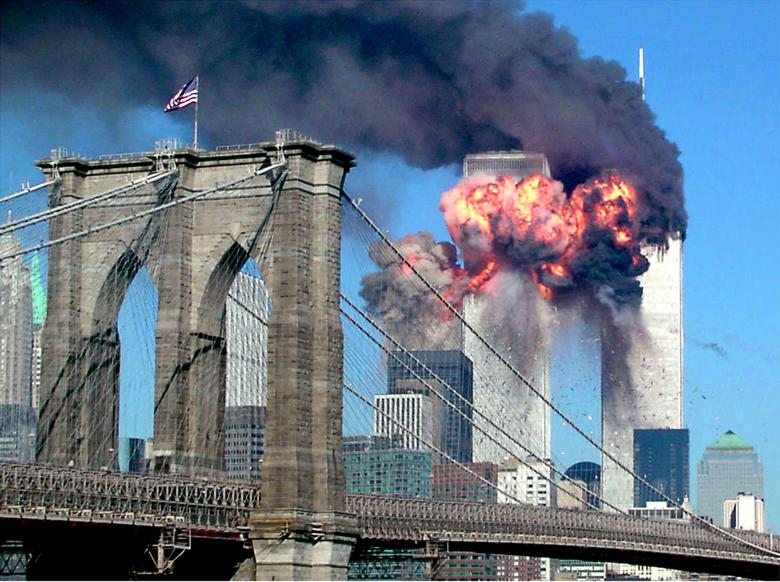

It is not easy to separate the past budget figures from one another. But all the sources show that the United States allocates tens of billions of dollars each year to air defense alone. Did all the advanced radar systems, satellite networks, missile shields and fighter jets prevent the tragic September 11 attacks 19 years ago? Unfortunately, no. And that dagger did not strike American targets abroad, but the very heart of the United States. So are we going to say that all these investments and technologies were wasted, that they were never necessary?

Terrorist attacks carried out by hijacking passenger planes and flying them at low altitude may have exacted a very heavy price. But the air defense system that could not prevent September 11 has, over the years, prevented or deterred many more attacks that would have been difficult to handle. Defensive measures can’t protect you from every danger, but they can be gradually improved. In today’s world, where threats are abundant, walking away from security would be downright suicidal. Do those who govern have the luxury of driving countries, societies and institutions to suicide?

Does a danger that has not occurred stop being a threat?

The information age was, in fact, heralding the threats facing humanity. Today we document a dystopia that has become reality using the sources of the past, and in doing so we only reinforce our conspiracy theories. But danger really did knock on the door, and those responsible ignored it. As scientific sources or experts have warned, “the danger that doesn’t happen is not the threat.” Otherwise, it didn’t take a seer to predict the consequences that could arise when synthetic biology spilled from laboratories into the street. It was enough to listen to the warnings.

While people went on living their normal lives, it is only today that we are noticing the articles, statements and books that described a new type of coronavirus, Covid-19, by name. Can the fact that deepfake attacks on the US election have not yet taken place guarantee that life outside the pandemic will keep its usual flow? Will what happens to us tomorrow, when synthetic media attacks occur, be any different from today’s pandemic?

Is it enough to save the day?

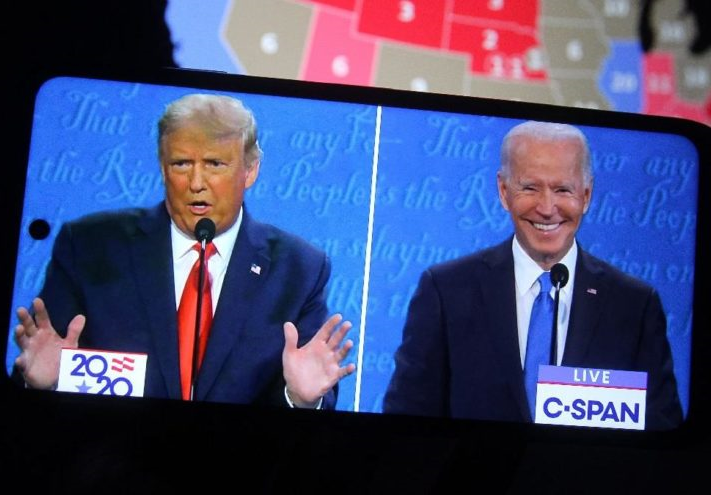

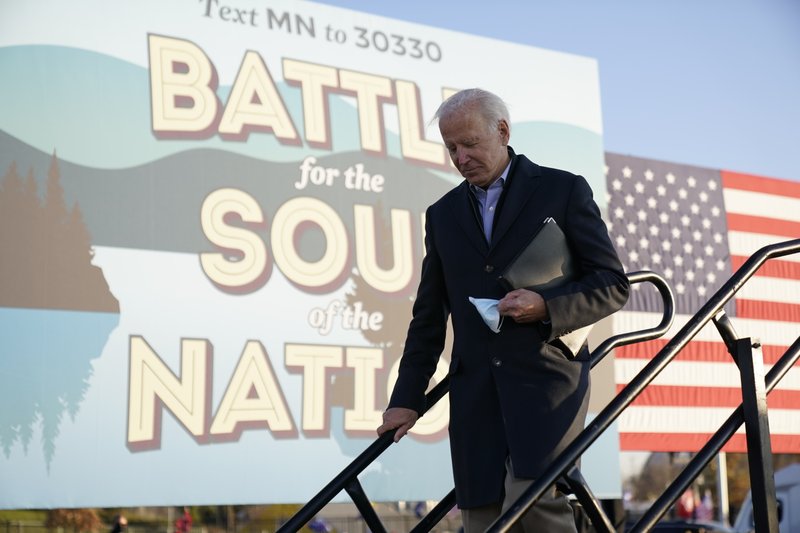

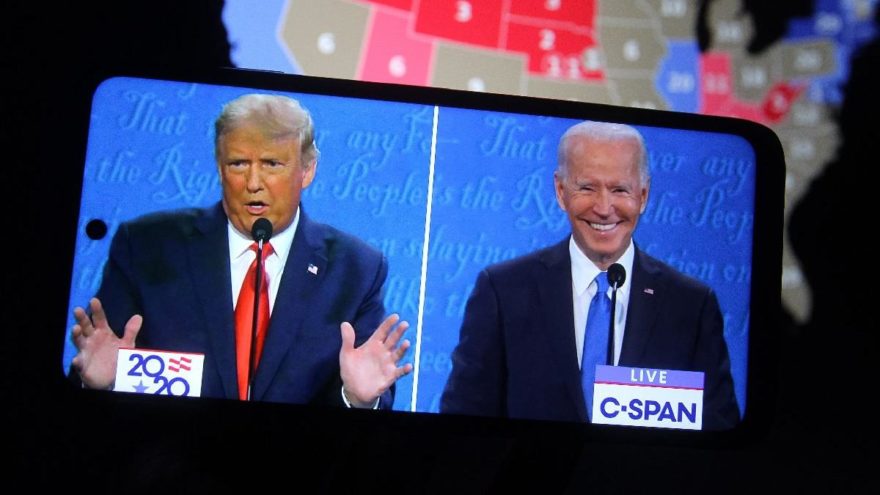

In mid-2019, at the U.S. House of Representatives Permanent Select Committee on Intelligence, it was argued that deepfake technology could undermine the U.S. election, and social media companies were warned. Before the election, countless speeches were delivered on this issue, statements were given and articles were published. As of November 17, nothing that had been feared had yet come to pass. The US election brought many controversies, especially with incumbent President Trump accusing President-elect Biden of cheating in the vote. Both sides maintain their “I won” claim, and it is unclear what will happen before the handover. Many catastrophic scenarios have been written, ranging from violence and inter-party conflict to civil war, but thankfully none has yet been staged.

One of the scenarios that did not play out was deepfake synthetic media attacks, which were feared to damage democracy through disinformation. Other than a few humorous clips in which Biden was shown mistakenly greeting Floridians as Minnesotans, or appearing to stick out his tongue, there was no convincing deepfake provocation. Now one wonders, “Where are the expected deepfake videos aimed at influencing this election?”

“Deepfakes have had little, if any, impact on the election,” Giorgio Patrini, founder of Sensity, a deepfake detection technology startup, tells Wired, a US current affairs and technology magazine. According to Angie Hayden, director of the AI Foundation, which is testing deepfake detection technology in collaboration with broadcasters and non-governmental organizations, including the BBC, the efforts were only enough to save the day. “It’s nice that your technology saves the day, but it’s going to be better when there’s no need to save the day,” Hayden says.

Attempts disguised as legitimate

One of the most basic warnings that experts make, especially about deepfake videos, concerns viral spread. Once a deepfake video has been published and has spread, even if you detect it and warn viewers, the number of people who watch and believe it is always greater than the number who notice the warning. In political competition, this inevitably creates an irresistible temptation. Even when a warning that the video is a deepfake is posted alongside it, people who watch a clip are still affected by the fake synthetic content. The electoral process in the United States has likewise been the scene of attempts of this kind that appear legitimate.

For example, Phil Ehr, a congressional candidate in Florida’s 1st District, featured deepfake footage in his ad campaign showing his opponent, incumbent Republican Matt Gaetz, saying things like “Fox News sucks” and “Obama is much cooler than me.” “If our campaign can make a video like this, think about what Putin is doing right now,” said Ehr, who accused his opponent of being insensitive to disinformation. But this sophisticated campaign failed to prevent Ehr’s election defeat.

Other leaders, too, were made part of the contest in the US presidential election through deepfake videos prepared in 2020. In one ad video, which highlights how a divided US would destroy trust in democracy and warns against deepfakes, a deepfake of North Korean leader Kim Jong-un says, “Democracy is not difficult to collapse. All you have to do is do nothing.” A similar deepfake advertising video was also made of Putin, the Russian leader. In this way, an attempt was made to raise US voters’ awareness of possible dangers from abroad.

Facebook and Twitter’s deepfake totem

Fanatical fans in particular, when things are going well or badly for their team in a difficult match, change seats or engage in other behavior that they believe will bring good luck. This superstition, known as “making a totem,” is believed to ward off a misfortune that is feared or has already occurred. The silence of social media giants Facebook and Twitter about deepfakes on the tensest days of the US election gives the impression that they, too, are making totems in this area.

Global social media platforms, worried that they would be used for deepfake campaigns, seem as of today to have come through this process without trouble. Facebook and Twitter, which added deepfake-related rules to their content moderation policies in early 2020, may be considered to have contributed to the election passing without a deepfake disaster. From the point of view of content control, however, this sensitive period does not appear to have been very quiet for them. Last week, Twitter’s blog post on the completion of the election process said a “misleading content” warning had been added to 300,000 tweets after October 27, or 0.2% of all election-related posts. But deepfakes were never mentioned. Facebook also declined to respond to Wired’s request for comment on this subject.

Every day can be that day…

Danielle Citron, who warned Congress about deepfakes last year when she was a law professor at the University of Maryland and is now at Boston University, says it’s too early to declare victory. Anyone interested in the subject knows that deepfake technology has become cheap and easy to access. There are now many opportunities to abuse deepfake technology. “Thanks to an information ecosystem full of disinformation, our country has two realities,” Citron says, “and every day it offers new opportunities to do harm.”

Gary Grossman is Senior Vice President of Technology Practice and global leader of the Artificial Intelligence Center of Excellence at Edelman, a firm with 60 offices and 6,000 employees worldwide that provides communications consulting to brands. In his article published on November 1 on the American technology news site VentureBeat (VB), Grossman points out that as of June this year, about 50,000 deepfakes had been detected. He stresses that this means an increase of more than 330% in a year.

The article highlighted remarks made at a cyber conference some time ago by retired Major General Brett Williams, who served as director of U.S. Cyber Command from 2012 to June 2014. “Artificial intelligence is a real thing,” said Williams. “It’s already being used by attackers. When they learn to do deepfakes, I would argue that it’s a potentially existential threat.” Based on these words, Grossman argues that the fact that this election seems to have passed without a deepfake attack can be explained by the technology not yet being mature enough.

The Center for Security and Emerging Technology’s report, titled “Deepfakes: A Grounded Threat Assessment” and published in July, once again shows that the danger will grow further in the future. The resulting claim is that even if deepfakes did not play a significant role in this election, it is only a matter of time before they affect elections, subvert democracy, and perhaps lead to military conflicts.

When the danger happens, who will be responsible?

Going back to the beginning of the article… we are facing a threat about which no manager in the public or private sector, and no decision maker in the media, the bureaucracy or a corporation, can say, “There has been no deepfake attack yet; we will look at the danger when it comes.”

No developer in the world may yet have brought deepfake detection technology to a 100% level of detection and security. But it should not be forgotten that every project aimed at media content security, especially under pandemic conditions in which communication has moved online, is of great value both for fending off some of the dangers and for deterrence.

Aren’t management and leadership, after all, about having vision?