Synthetic media is becoming an increasingly serious online threat, but not yet because of cyber-attacks actually carried out with it. The growing danger is that the conditions for such attacks are ripening at great speed. In other words, easily accessible advances in deepfake technology almost nudge those with bad intentions into using them. They offer ready, comfortable, easy opportunities under various legitimate covers. All that remains is to combine artificial intelligence (AI) with demonic intelligence: to work out how the available deepfake audio and video generation capabilities can be used for attack, fraud or deceit.

Every deadly danger is also a great power for those who hold it. That is why such jarring technologies, which can always be presented as legitimate and even deserving of support, are justified by their positive uses. Humanity learned how nuclear power could turn into an inhuman weapon only by paying a heavy price, for decades, after the atomic bomb. Biological weapons, the work of synthetic biology, are increasingly becoming the most out-of-control means of hate crime. And now, synthetic media? Won’t synthetic media, which we enjoy today, which we find useful, which manipulates our senses and perceptions with illusion, turn into a weapon that others use against us tomorrow? So, can we keep ignoring it and keep finding it fun?

Synthetic media technologies are evolving unchecked

The COVID-19 pandemic has forced business and social life to digitize all over the world, and life is increasingly moving online. Even before the pandemic, online communication and digital media, with the magnetic pull of social media, were increasingly confining life to digital screens. In its aftermath, the digitization of everything from shopping to business meetings, from education to conversation and even politics, has become the normal flow of life. The fact that synthetic media, like a new type of coronavirus, has not yet reached everyone does not mean that a major outbreak cannot occur at any time. Just as the deadly threat of synthetic biology was ignored until it moved from the laboratory to the street, synthetic media technologies are developing unchecked.

The danger in videoconferencing appears…

During the pandemic, it became clear that the irrepressible rise of video conferencing applications, especially Zoom, had created a favorable climate for deepfake attacks. Indeed, it did not take long for intruders to break into online video conferencing sessions and harass participants, a practice known as Zoombombing. Real-time deepfake technology was also tried there for the first time, trading on the pull of Elon Musk’s profile, even if not for attack purposes.

About 6 months ago, a programmer named Ali Aliev demonstrated a method he had developed for creating deepfakes in real time in Zoom. To test the project, Aliev had a deepfake of celebrity tech billionaire Elon Musk ‘accidentally’ walk into an online meeting to which he had not been invited. Musk thus became the hero of the most talked-about Zoombombing. Judging by the reactions, a real-time deepfake can be convincing enough on Zoom, where drops in resolution and frame rate are common. And the bewildered looks on the faces of the unsuspecting participants show that Aliev achieved his goal.

This technology is called Avatarify. It was built on open source AI code called the “First Order Motion Model for Image Animation”, produced by researchers at the University of Trento in Italy. Avatarify superimposes another person’s face on the user’s in real time and works without a hitch while streaming. However, it needs a powerful machine, such as a high-end gaming computer, and its user needs at least a basic grounding in programming and AI.

For those who want to try it out, Avatarify is available on GitHub and offers its users a list of world-famous models: Albert Einstein, Eminem, Steve Jobs, Mona Lisa, Barack Obama, Harry Potter and Ronaldo. According to its developer, there is also no need to train the model; you just put the pictures in a folder. In other words, for anyone with a definite intention and goal, good or bad, who is no stranger to technology and has enough time, ambition and equipment, manipulating a live video conference call with a synthetic profile is not impossible. Would you find it amusing if it happened to you?

Deepfake will now also improve video conferencing quality

Until 6 months ago, the concern was that deepfakes would exploit the shortcomings of video conferencing technology and turn them into a security weakness for online conversations and meetings. After a short break, deepfake technology is now reappearing in this area in an even more confusing form: it promises online users a better-quality video conversation that will make them feel better and save them money.

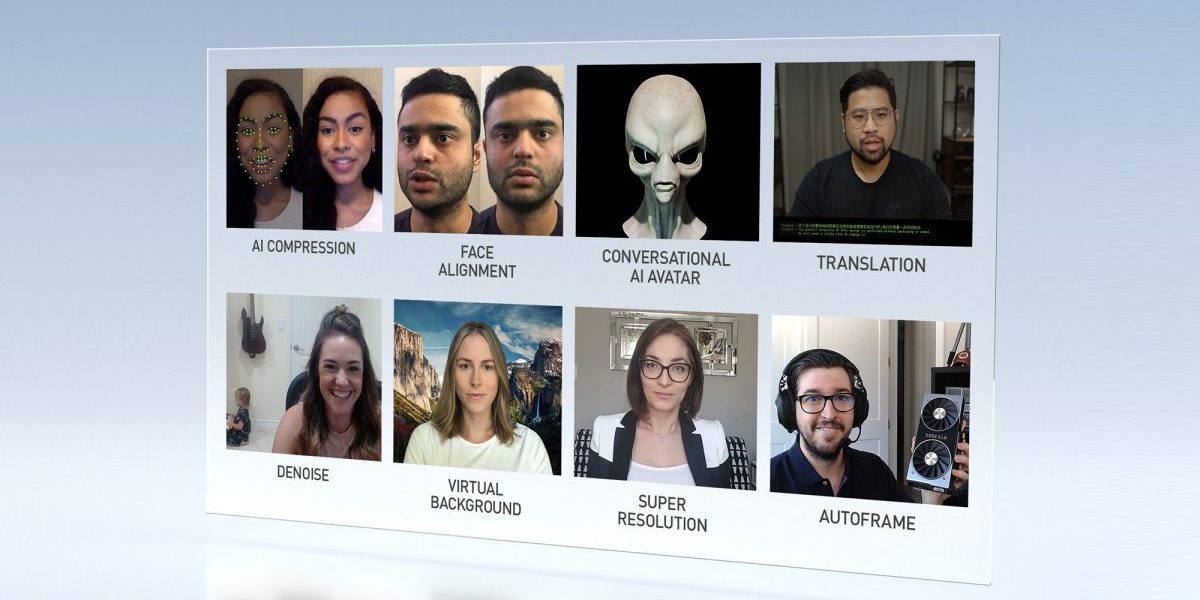

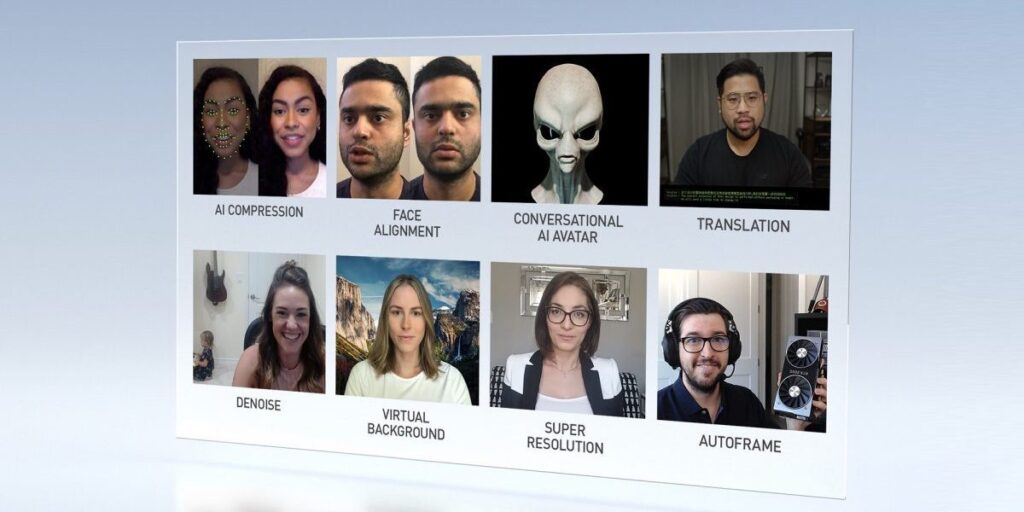

Nvidia, the technology company that makes graphics cards, has introduced a new product, Maxine, to the digital ecosystem. Maxine is not aimed directly at video conferencing end users; it is an AI technology that video conferencing platforms will use in the background, and it looks like a breakthrough in online video calls.

Bandwidth savings, the most attractive carrot

Maxine is a cloud-based, AI-powered software development kit designed for developers of video conferencing services. With this new system, the bandwidth used for video in a conference call is said to drop to one-tenth of its current level. This is, of course, a bonus that drives down costs. In particular, it offers significant savings to end users on limited internet quotas. The feature also matters for delivering high resolution over low-bandwidth connections.
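To make the quota argument concrete, here is a rough back-of-the-envelope sketch. The 10 GB quota and the 1.5 Mbps baseline bitrate are hypothetical figures chosen only for illustration; they are not Nvidia's numbers. Only the one-tenth ratio comes from the claim above.

```python
def call_hours(quota_gb: float, video_mbps: float) -> float:
    """Hours of video a data quota covers at a constant video bitrate."""
    quota_megabits = quota_gb * 1000 * 8  # 1 GB = 1000 MB = 8000 megabits
    seconds = quota_megabits / video_mbps
    return seconds / 3600


# Hypothetical figures, for illustration only.
QUOTA_GB = 10.0
BASELINE_MBPS = 1.5
REDUCED_MBPS = BASELINE_MBPS / 10  # the "one-tenth" bandwidth claim

baseline_hours = call_hours(QUOTA_GB, BASELINE_MBPS)
reduced_hours = call_hours(QUOTA_GB, REDUCED_MBPS)

print(f"baseline: {baseline_hours:.1f} h of video")
print(f"one-tenth bitrate: {reduced_hours:.1f} h of video")
```

Whatever the real figures are, the same quota would stretch to about ten times as many hours of video if the claim holds.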

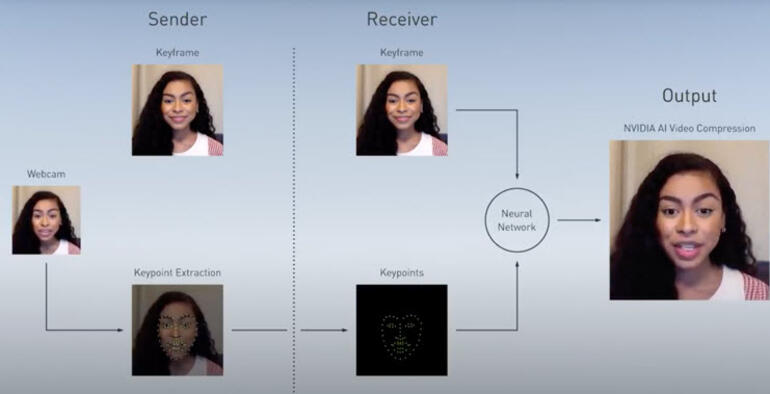

In a sense, this system works like Avatarify, which performs real-time deepfake animation. At the start of the call, a reference image is sent to the other side. After that, unlike in existing applications, the image pixels that consume most of the bandwidth are not sent. Instead, the basic movements of the facial expression are detected and used to animate the reference image sent at the beginning. In a sense, a new animation is created from the captured photo; in other words, the real image of the person is turned into a deepfake avatar.
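The flow described above can be sketched as a toy sender and receiver. This is not Maxine's API or data format: the frame size, the number of keypoints, the 32-bit float encoding and the `Receiver` class are all assumptions made for illustration, and the step where a neural model would redraw the face is left as a placeholder.

```python
import struct
from dataclasses import dataclass, field

# Hypothetical sizes: a 640x360 RGB frame and 10 facial keypoints per frame.
WIDTH, HEIGHT, CHANNELS = 640, 360, 3
NUM_KEYPOINTS = 10


def encode_keypoints(points):
    """Pack (x, y) keypoints as little-endian 32-bit floats (8 bytes each)."""
    flat = [coord for point in points for coord in point]
    return struct.pack(f"<{len(flat)}f", *flat)


def decode_keypoints(payload):
    """Unpack the float payload back into a list of (x, y) pairs."""
    values = struct.unpack(f"<{len(payload) // 4}f", payload)
    return list(zip(values[0::2], values[1::2]))


@dataclass
class Receiver:
    reference: bytes = b""
    frames: list = field(default_factory=list)

    def on_reference(self, image: bytes) -> None:
        self.reference = image

    def on_keypoints(self, payload: bytes) -> None:
        # A real system would run a neural model here to animate the
        # reference image; this sketch only stores the decoded keypoints.
        self.frames.append(decode_keypoints(payload))


reference_image = bytes(WIDTH * HEIGHT * CHANNELS)  # placeholder pixels
receiver = Receiver()
receiver.on_reference(reference_image)  # sent once, at the start
bytes_sent = len(reference_image)

for frame_index in range(30):
    # Placeholder "detected" keypoints that drift a little each frame.
    points = [(0.1 * k + 0.001 * frame_index, 0.5) for k in range(NUM_KEYPOINTS)]
    payload = encode_keypoints(points)
    receiver.on_keypoints(payload)
    bytes_sent += len(payload)

raw_bytes = 30 * len(reference_image)  # every frame sent as raw pixels
print(f"keypoint scheme: {bytes_sent} bytes, raw pixels: {raw_bytes} bytes")
```

The comparison with raw pixels exaggerates the gap, since real video calls compress their frames, but it shows why sending a handful of coordinates per frame is so much cheaper than sending the picture itself.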

As bandwidth decreases, resolution and visual contact increase

Another solution Maxine promises for the bandwidth problem is to raise the resolution of low-quality video with artificial intelligence. A user whose slow internet connection limits them to 360p can have the cloud-based AI upscale the image to 720p, so that it reaches the other side at higher quality.
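To give a sense of scale, the sketch below doubles an image in each dimension by simply repeating pixels. This nearest-neighbour method is a plain stand-in, not the AI model Maxine uses, which would try to reconstruct detail rather than copy it; the 16:9 frame sizes are likewise an assumption.

```python
def upscale_2x(image):
    """Double width and height by repeating each pixel (nearest neighbour)."""
    out = []
    for row in image:
        wide = [pixel for pixel in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))  # repeat the widened row to double the height
    return out


tiny = [[1, 2],
        [3, 4]]
upscaled = upscale_2x(tiny)
for row in upscaled:
    print(row)

# Assumed 16:9 frame sizes for 360p and 720p.
src_w, src_h = 640, 360
dst_w, dst_h = src_w * 2, src_h * 2
pixel_ratio = (dst_w * dst_h) / (src_w * src_h)
print(f"{src_w}x{src_h} -> {dst_w}x{dst_h}: {pixel_ratio:.0f}x the pixels")
```

Going from 360p to 720p means the receiver sees four times as many pixels as the sender transmitted, so three out of every four have to be filled in on the way.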

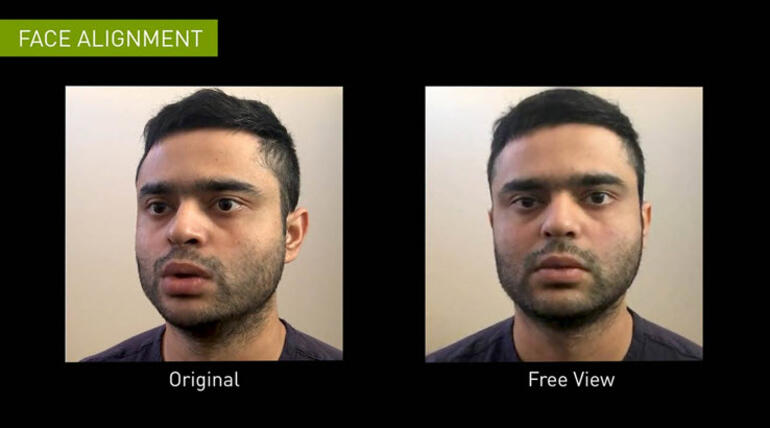

Maxine also lets videoconferencing users eliminate the eye contact problem. With so many participants and presentations competing for attention, people look at the screen rather than the camera, which skews the angle of each participant’s image. Because the user’s gaze rarely lines up with the camera, they appear to be looking away, up or down, and not at the other people. To solve this, an artificial intelligence algorithm rotates the user’s face so that they appear to be looking at the others. Face re-lighting improves image quality, and background noise is suppressed to improve sound quality.

Should we be happy about the development or afraid of the danger?

So what, you’ll say? Online video chat has already become a mandatory form of communication. And remote communication had its problems: bandwidth was not enough, video and audio quality was often poor, and calls kept dropping. How good, then, that with these technical problems reduced or gone, we will be able to concentrate more on the communication itself.

But Maxine’s cosmetic features, which tamper with the naturalness of the image and audio stream in a video conference, raise some understandably disturbing questions about the alarming role AI plays in deepfakes.

Maxine uses AI models called generative adversarial networks (GANs) to change faces on video feeds. The best-performing GANs can create, for example, realistic portraits of people who do not exist or synthetic snapshots of fictional objects. Couldn’t those who set out to abuse Maxine use it to alter the lighting in a video feed and misleadingly rearrange frames in real time?

Computer vision algorithms have shown bias against darker skin, as in Zoom’s virtual backgrounds and Twitter’s automatic photo cropping tool, and this can be disturbing for people with darker skin. Nvidia did not detail the datasets or AI model training techniques it used to develop Maxine. But is there any guarantee that a video conferencing platform using this technology will handle black faces as effectively as light-skinned faces?

Is digital makeup good for the soul?

Beyond the problem of racial bias lies the fact that facial enhancement algorithms are not always good for mental health. Deceiving others is dangerous, but it is just as dangerous that a person can deceive himself in the process.

How this danger can affect the psychology of the individual and of society is a subject for a future article. But the deepfake threat is reaching the point where it can insidiously damage even our own existential reality. Do you realize that?